Introduction

Reinforcement learning (RL) is a powerful method for teaching agents to make decisions in complex environments. One intriguing RL challenge is Gymnasium's Car Racing environment, a control task in which the agent must navigate a race track efficiently. The environment is demanding because it combines high-dimensional image observations with the need for precise control actions.

In this blog post, we solve the Car Racing environment using three prominent RL algorithms: Soft Actor-Critic (SAC), Proximal Policy Optimization (PPO), and Deep Q-Network (DQN). Each of these algorithms has its own strengths and weaknesses, which makes them good candidates for a comparative study.

We'll explore the theoretical foundations of each algorithm, discuss their implementation, and analyze their performance in the Car Racing environment. By the end of this journey, you'll gain insights into the nuances of each approach and understand how they handle the challenges posed by continuous action spaces and dynamic environments. Whether you're a seasoned RL practitioner or a curious newcomer, this exploration promises to deepen your understanding of reinforcement learning and its application to complex control tasks.

Car Racing Environment & Reward

The CarRacing-v3 environment in Gymnasium is a continuous control task where the agent controls a car on a procedurally generated racetrack. Each observation is a 96x96 RGB top-down image of the car and track, and each action is a three-value vector of steering, gas, and brake. The objective is to navigate the car around the track as efficiently as possible, minimizing time off-track and maximizing the number of tiles covered. The combination of image observations and continuous actions makes the problem challenging, since staying on the track requires precise control.

The reward system in CarRacing-v3 incentivizes the agent to cover the track quickly. Each newly visited track tile yields +1000/N, where N is the total number of tiles on the track, and every frame costs -0.1, so a slow car steadily loses reward. Going off the track is therefore penalized indirectly: the agent stops collecting tile rewards while the time penalty keeps accumulating. The episode ends when the car has visited all the track tiles, or when it drives far outside the playfield, in which case it receives a reward of -100. This reward structure encourages the agent to drive smoothly, avoid time off-track, and maximize coverage of the racetrack.
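To make the reward arithmetic concrete, here is a minimal sketch of how an episode return adds up. It uses the +1000/N tile reward together with the -0.1 per-frame penalty from the Gymnasium documentation. The function name `episode_return` and its parameters are illustrative and not part of Gymnasium's API.

```python
def episode_return(tiles_visited: int, total_tiles: int, frames: int,
                   frame_penalty: float = 0.1) -> float:
    """Approximate CarRacing return: tile rewards minus a per-frame time penalty.

    Assumption: each visited tile pays 1000 / total_tiles and every frame
    costs `frame_penalty`. The -100 penalty for leaving the playfield is ignored.
    """
    if total_tiles <= 0:
        raise ValueError("total_tiles must be positive")
    tile_reward = 1000.0 / total_tiles
    return tiles_visited * tile_reward - frame_penalty * frames


# A full lap (all tiles visited) finished in 732 frames
full_lap = episode_return(tiles_visited=300, total_tiles=300, frames=732)

# The same number of frames, but only half the tiles covered
half_lap = episode_return(tiles_visited=150, total_tiles=300, frames=732)

print(round(full_lap, 1), round(half_lap, 1))
```

Under these assumptions a full lap in 732 frames comes to about 926.8 points, so finishing faster is the only way to push the score closer to 1000.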

Proximal Policy Optimization (PPO)

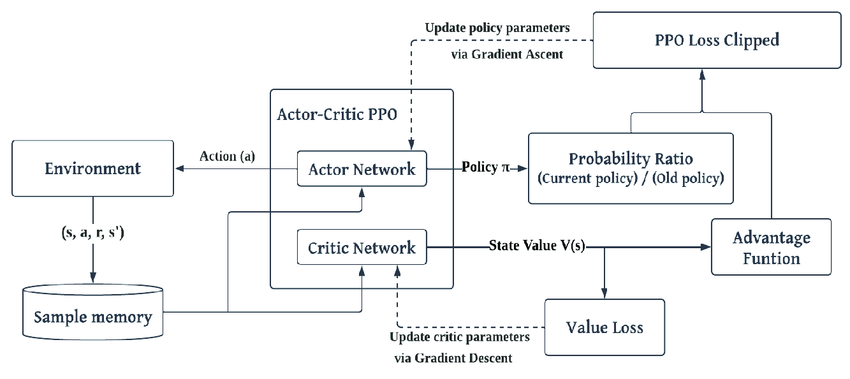

PPO is an on-policy algorithm that simplifies the trust region optimization used in algorithms like TRPO. It is particularly known for its stability and robustness in environments with continuous action spaces.

Implementation:

```python
import gymnasium as gym
from stable_baselines3 import PPO

# Create CarRacing environment (continuous actions by default)
env = gym.make('CarRacing-v3')

# Initialize PPO with a CNN policy for the image observations
model = PPO('CnnPolicy', env, verbose=1)

# Train the model
model.learn(total_timesteps=1000000)

# Save the model
model.save("ppo_car_racing")
```

Performance in Car Racing:

- Strengths: PPO performs well in continuous action environments like Car Racing due to its ability to adjust policies incrementally, avoiding drastic changes that could destabilize training.

- Weaknesses: Being an on-policy algorithm, PPO may require more data compared to off-policy methods like SAC, making it less sample-efficient.

PPO in Practice:

In Car Racing, PPO effectively balances exploration and exploitation. The clipping mechanism in PPO prevents large policy updates, making it a reliable choice for environments where the control needs to be smooth and steady.
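The clipping mechanism can be illustrated with a few lines of plain Python. This is a minimal per-sample sketch of the clipped surrogate objective, not the Stable-Baselines3 implementation. The function name `clipped_surrogate` is hypothetical, and the clip range of 0.2 is an assumed, commonly used value.

```python
def clipped_surrogate(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """Per-sample PPO objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    `ratio` is pi_new(a|s) / pi_old(a|s); `advantage` estimates how much
    better the action was than average.
    """
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# Good action (A > 0): the gain is capped once the ratio exceeds 1 + eps
capped_gain = clipped_surrogate(ratio=1.5, advantage=1.0)

# Bad action (A < 0): the objective stops changing once the ratio drops below 1 - eps
capped_loss = clipped_surrogate(ratio=0.5, advantage=-1.0)

# Bad action made more likely: no clipping, the full penalty applies
full_penalty = clipped_surrogate(ratio=1.5, advantage=-1.0)

print(capped_gain, capped_loss, full_penalty)
```

Because the objective flattens outside the clip range, a single batch cannot push the policy arbitrarily far from the one that collected the data, which is what keeps steering and throttle updates gradual.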

Soft Actor-Critic (SAC)

SAC is an off-policy actor-critic algorithm that maximizes expected return plus an entropy bonus. The entropy term promotes exploration by encouraging the agent to act as randomly as possible while still succeeding at the task.

Implementation:

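```python
import gymnasium as gym
from stable_baselines3 import SAC

# Create CarRacing environment (continuous actions by default)
env = gym.make('CarRacing-v3')

# Initialize SAC
# Assumption: a smaller replay buffer than the default, since storing
# image observations is memory-hungry; adjust to your available RAM.
model = SAC('CnnPolicy', env, buffer_size=100_000, verbose=1)

# Train the model
model.learn(total_timesteps=1000000)

# Save the model
model.save("sac_car_racing")
```

Performance in Car Racing: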

- Strengths: SAC is highly sample-efficient because it reuses past experience from a replay buffer, and its policy outputs continuous actions directly, making it suitable for environments like Car Racing where precise control is necessary.

- Weaknesses: The algorithm is more complex to implement and requires careful tuning, particularly of the temperature parameter, which balances exploration and exploitation.

SAC in Practice:

In the Car Racing environment, SAC's entropy-driven exploration helps the agent discover a variety of strategies and adapt to changes in the track, which is critical for navigating complex, high-dimensional spaces.
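To see where the temperature parameter enters, here is a minimal sketch of the entropy-regularized ("soft") target that SAC's critics are trained toward. It is written for a single transition with plain floats. The function name `soft_td_target` and the default values for `gamma` and `alpha` are assumptions for illustration, not values taken from this post's experiments.

```python
def soft_td_target(reward: float, done: bool,
                   q1_next: float, q2_next: float, log_prob_next: float,
                   gamma: float = 0.99, alpha: float = 0.2) -> float:
    """Soft Bellman target: r + gamma * (min(Q1, Q2) - alpha * log pi(a'|s')).

    `alpha` is the temperature: a larger value rewards less likely
    (more exploratory) next actions more strongly.
    """
    # Taking the minimum of two critics reduces overestimation
    min_q = min(q1_next, q2_next)
    # Subtracting alpha * log-prob adds an entropy bonus to the value
    soft_value = min_q - alpha * log_prob_next
    # No bootstrapping past a terminal state
    return reward + gamma * (1.0 - float(done)) * soft_value


with_entropy = soft_td_target(1.0, False, q1_next=10.0, q2_next=12.0, log_prob_next=-2.0)
without_entropy = soft_td_target(1.0, False, q1_next=10.0, q2_next=12.0,
                                 log_prob_next=-2.0, alpha=0.0)
print(with_entropy, without_entropy)
```

With `alpha=0.0` the target reduces to an ordinary bootstrapped value, so the gap between the two printed numbers is exactly the discounted entropy bonus that tuning the temperature controls.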

Deep Q-Network (DQN)

DQN is a pioneering algorithm in the RL domain, particularly effective in discrete action spaces. It uses a neural network to approximate Q-values, the expected return of taking each action in a given state.
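As a rough sketch of what the network is trained to predict, the snippet below computes the one-step Q-learning target for a single transition. The names `dqn_target` and `td_error` are hypothetical helpers, and the Q-values are made-up numbers rather than the output of a real network.

```python
from typing import Sequence


def dqn_target(reward: float, done: bool, next_q_values: Sequence[float],
               gamma: float = 0.99) -> float:
    """One-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    if done:
        # Terminal transition: nothing to bootstrap from
        return reward
    return reward + gamma * max(next_q_values)


def td_error(q_pred: float, target: float) -> float:
    """Difference the network's loss tries to drive toward zero."""
    return target - q_pred


# Made-up Q-values for three discrete actions in the next state
target = dqn_target(reward=1.0, done=False, next_q_values=[0.5, 2.0, 1.0])
error = td_error(q_pred=2.5, target=target)
print(target, error)
```

The `max` over `next_q_values` is the reason DQN needs a finite set of actions: with continuous steering, gas, and brake values there is no list to take a maximum over.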

Implementation:

```python
import gymnasium as gym
from stable_baselines3 import DQN

# Create CarRacing environment with discrete actions,
# since DQN does not support a continuous action space
env = gym.make('CarRacing-v3', continuous=False)

# Initialize DQN
model = DQN('CnnPolicy', env, verbose=1)

# Train the model
model.learn(total_timesteps=1000000)

# Save the model
model.save("dqn_car_racing")
```

Performance in Car Racing:

- Strengths: DQN is simple and effective in environments with discrete actions, with relatively lower computational demands.

- Weaknesses: It is less suited for continuous action spaces like Car Racing. The need for discretization of actions can lead to suboptimal policies and reduced performance.

DQN in Practice:

While DQN has achieved success in games like Atari, its performance in Car Racing is limited due to the environment's continuous nature. Modifications, such as discretizing the action space or hybrid approaches, are necessary but often lead to less optimal results.
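Discretizing the action space can be sketched as a lookup table from action indices to (steering, gas, brake) triples. The table below is hypothetical and chosen only for illustration; it is not the mapping Gymnasium uses internally when the environment is created with `continuous=False`.

```python
from typing import Dict, Tuple

Action = Tuple[float, float, float]  # (steering, gas, brake)

# Hypothetical action table: steering in [-1, 1], gas and brake in [0, 1]
DISCRETE_ACTIONS: Dict[int, Action] = {
    0: (0.0, 0.0, 0.0),   # coast
    1: (-1.0, 0.0, 0.0),  # steer fully left
    2: (1.0, 0.0, 0.0),   # steer fully right
    3: (0.0, 1.0, 0.0),   # full gas
    4: (0.0, 0.0, 0.8),   # brake
}


def to_continuous(index: int) -> Action:
    """Translate a discrete action index into a continuous control vector."""
    try:
        return DISCRETE_ACTIONS[index]
    except KeyError:
        raise ValueError(f"unknown discrete action: {index}") from None


# The agent picks an index; the environment would receive the matching triple
chosen = to_continuous(3)
print(chosen)
```

A table like this has no entry for, say, gentle steering combined with partial gas, which is one concrete way discretization can lead to less optimal driving than a policy that outputs continuous values.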

Comparison of Algorithms

PPO:

- Strengths: Stable and performs well in environments with continuous action spaces due to its policy gradient approach.

- Weaknesses: As an on-policy algorithm, it can be less sample-efficient, requiring more interactions with the environment.

SAC:

- Strengths: Highly sample-efficient and excels in continuous action spaces. The use of entropy maximization leads to robust exploration.

- Weaknesses: More complex to implement and tune, especially with respect to the entropy coefficient.

DQN:

- Strengths: Simplicity and effectiveness in discrete action environments. Less computationally intensive than some policy-based methods.

- Weaknesses: Struggles in high-dimensional and continuous action spaces without significant modifications.

Conclusions

For the CarRacing environment, which has a continuous action space, PPO and SAC are more effective choices. SAC's sample efficiency and strong performance in continuous action environments make it particularly advantageous. On the other hand, DQN, although powerful in discrete action spaces, is less suited for this environment due to its inherent design limitations.

In summary, understanding the strengths and weaknesses of these algorithms provides valuable insights into their applicability to complex tasks like Car Racing. By carefully selecting and tuning the right algorithm, we can significantly enhance an agent's performance in challenging environments.

Additional Learning Materials

- Applying a Deep Q Network for OpenAI’s Car Racing Game

- Control CartRacing-v2 environment using DQN from scratch

- Gymnasium Documentation - Car Racing

- Solving Car Racing with Proximal Policy Optimization